AI hallucinations: Reasons, costs, and mitigation

Organizations of all kinds, in all industries, are racing to jump on the AI bandwagon. Chatbots, search engines, and tools for interacting with customers, users, and others, all powered by large language models (LLMs), are being deployed at breakneck pace. But in many cases, too little attention is being paid to the various kinds of risk that come with this very new technology.

In a recent blog post, Barracuda software engineer Gabriel Moss provided a detailed account of the ways in which attackers can—and do—use data poisoning and manipulation techniques to corrupt LLMs in ways that can be costly for their owners.

In that article, Gabriel also touched briefly on the problem of AI “hallucinations,” which can also have costly consequences but are not the result of deliberate actions by human attackers.

Would ChatGPT lie to you?

Well, that gets into some philosophical issues, such as whether it is possible to lie without knowing that you’re lying, or even understanding the difference between lies and truth. But it and other LLMs have certainly shown themselves capable of making things up.

As I’m sure you already know, when LLMs produce confabulated responses to queries, those responses are called hallucinations. Indeed, the Cambridge Dictionary named “hallucinate” its Word of the Year for 2023, as it added a new, AI-related definition of the word.

Unfortunately, AI hallucinations can have some truly harmful consequences, both for organizations deploying LLM chatbots and for their end users. However, there are ways to minimize hallucinations and mitigate the harms they cause.

Why I don’t use LLMs

I once asked an AI chatbot to produce a first draft of a blog post for me. Because it had been trained on information published before 2016, I told it to use the internet to find modern sources of information. And I specifically instructed it to include citations and links to the sources it used.

What I got back was a reasonably good little essay. The writing style was bland but all right. And it cited several news articles and scholarly journals.

But I found that the citations were all invented. In most cases, either the site or the specific article cited did not exist at all. In the one case where the article did exist, the direct quotation that my chatbot attributed to it was nowhere to be found within it.

I was surprised at the time but shouldn’t have been. These systems are primarily driven by the imperative to deliver a response, and there’s no sense in which they understand that it should be factual. So, they can produce citations that are well-formed and plausible without knowing how citations actually work: that they must accurately cite sources that really exist.

I have begun to refer to these LLM chatbots as “plausibility engines.” I keep hoping it will catch on, but so far it hasn’t. In any case, after that incident, I decided I was quite happy at my age to keep on writing the old-fashioned way. But with proper fact-checking and rewriting, I can also see why some writers might derive a lot of value from them.

Costly real-life examples

Not everyone has bothered to check citations in LLM-generated text, sometimes to their detriment. For example, last year two lawyers in the US were fined, along with their firm, when they submitted a court filing in an aviation injury claim.

The problem was, they had used ChatGPT to generate the filing, and it cited nonexistent cases. The judge in the case saw nothing improper about using the LLM to assist in research, but stated—unnecessarily, one would hope—that lawyers must make sure their filings are accurate.

In a similar case, a university professor asked a librarian to produce journal articles from a list that he gave her. But he’d gotten the list from ChatGPT, and the articles it listed did not exist. In this case there was no cost beyond embarrassment.

Other cases are potentially more problematic:

Amazon appears to be selling AI-authored guides to mushroom foraging. What could possibly go wrong?

In its initial public demo, Bing Chat delivered inaccurate financial data about The Gap and Lululemon. Caveat investor! (Google’s first demo of Bard was similarly embarrassing.)

New York City’s AI-powered MyCity chatbot has been making up city statutes and policies, for example telling one user that they could not be evicted for non-payment of rent.

In at least two cases, ChatGPT has invented scandals involving real people (a professor supposedly accused of sexual harassment, and a mayor supposedly convicted of bribery), potentially leading to real harm to those individuals.

How to mitigate the problem

Obviously, fact-checking and human supervision are critical to catching and eliminating AI hallucinations. Unfortunately, that means cutting into the cost savings that these bots deliver by replacing human workers.
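Some of that checking can be partly automated. As a minimal sketch, assuming you have already retrieved the text of each cited source yourself, the Python below flags any quotation in a draft that does not appear in the source it is attributed to. The function names and the shape of the inputs are hypothetical, chosen only for illustration, and a check like this supplements human review rather than replacing it.

```python
import re
import unicodedata

# Map typographic quotes and apostrophes to plain ASCII equivalents.
_QUOTE_MAP = str.maketrans({
    "\u201c": '"', "\u201d": '"',
    "\u2018": "'", "\u2019": "'",
})


def normalize(text: str) -> str:
    """Unify quote marks, collapse whitespace, and lowercase for comparison."""
    text = unicodedata.normalize("NFKC", text).translate(_QUOTE_MAP)
    return re.sub(r"\s+", " ", text).strip().lower()


def quote_appears_in(quote: str, source_text: str) -> bool:
    """Return True only if the (non-empty) quote occurs in the source text."""
    needle = normalize(quote)
    return bool(needle) and needle in normalize(source_text)


def unverified_quotes(draft_quotes, sources):
    """Return the (quote, source_id) pairs that could not be confirmed.

    draft_quotes: list of (quote, source_id) pairs taken from the LLM draft.
    sources: dict mapping source_id to the text a human actually retrieved.
    A quote is flagged if its source is missing or does not contain it.
    """
    flagged = []
    for quote, source_id in draft_quotes:
        text = sources.get(source_id)
        if text is None or not quote_appears_in(quote, text):
            flagged.append((quote, source_id))
    return flagged
```

Anything this returns needs a human look: either the source does not exist, or the quotation was never in it. A quotation that passes is only confirmed to be present, not to be used fairly or in context.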

Another key strategy is to tightly manage and control the information with which you train your LLM. Keep it relevant to the LLM’s intended task and limited to accurate sources. This won’t completely prevent it from sometimes putting words together inaccurately, but it reduces the number of hallucinations produced by the use of unrelated and unreliable content.
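To make that concrete, here is a rough sketch of one way to curate a corpus before training or fine-tuning: keep only documents from an allowlist of trusted domains that also mention at least one task-relevant term. The domain names, the document format (dicts with `url` and `text` keys), and the function names are all assumptions for illustration, and a real pipeline would need far more careful quality checks than this.

```python
from urllib.parse import urlparse

# Hypothetical allowlist; replace with sources you have actually vetted.
TRUSTED_DOMAINS = {"docs.example.com", "kb.example.com"}


def is_trusted(url: str, trusted=TRUSTED_DOMAINS) -> bool:
    """True if the URL's host is a trusted domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in trusted)


def curate(documents, trusted=TRUSTED_DOMAINS, required_terms=()):
    """Keep documents from trusted hosts that mention a task-relevant term.

    documents: iterable of dicts with "url" and "text" keys (assumed format).
    required_terms: if non-empty, a document must contain at least one term.
    """
    kept = []
    for doc in documents:
        if not is_trusted(doc["url"], trusted):
            continue  # unvetted source
        text = doc["text"].lower()
        if required_terms and not any(t.lower() in text for t in required_terms):
            continue  # trusted source, but unrelated to the intended task
        kept.append(doc)
    return kept
```

For a customer-service bot, for instance, you might call `curate(docs, required_terms=("refund", "warranty"))` so that only vetted, on-topic pages make it into the training set.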

More generally, it’s critical to understand the limitations of these systems and not expect too much from them. They absolutely have their place and will likely improve over time (but see below).

Why it’s likely to get worse anyway

Unfortunately, there is one significant reason why the problem of hallucinations is likely to get worse over time: As LLMs create ever more bad output, that output is increasingly going to become part of the next LLM’s training set, creating a self-reinforcing error loop that might quickly get out of hand.

In closing, I’ll just repeat the final paragraph of Gabriel’s blog post that I referenced at the opening:

LLM applications learn from themselves and each other, and they are facing a self-feedback loop crisis, where they may start to inadvertently poison their own and one another’s training sets simply by being used. Ironically, as the popularity and use of AI-generated content climbs, so too does the likelihood of the models collapsing in on themselves. The future for generative AI is far from certain.

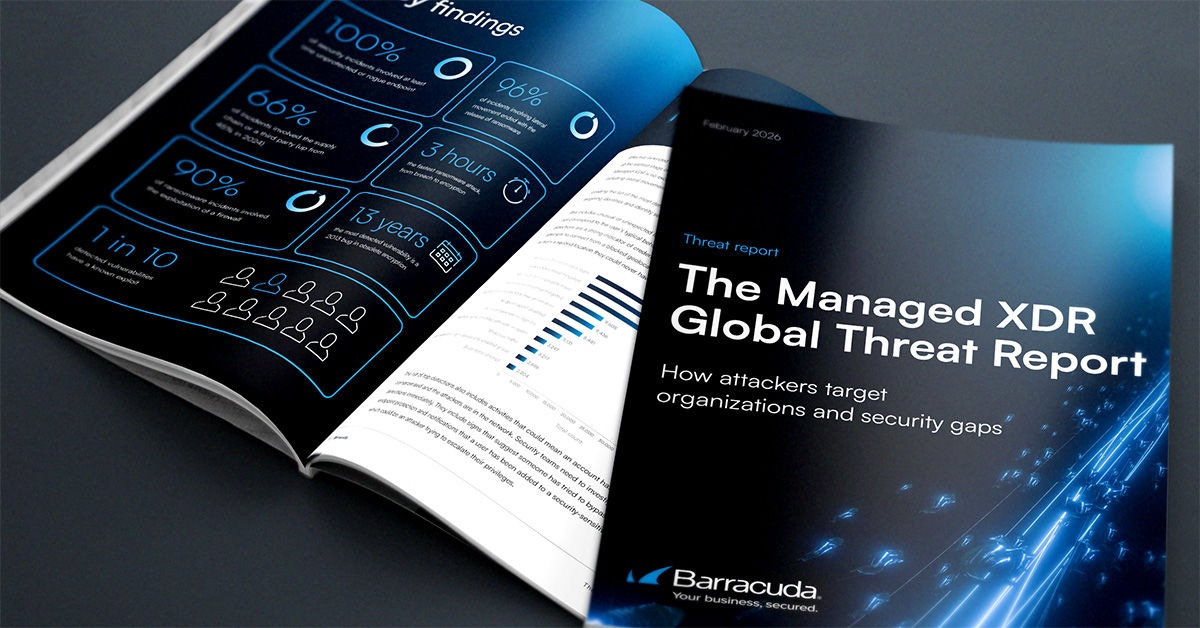

The Managed XDR Global Threat Report

Key findings about the tactics attackers use to target organizations and the security weak spots they try to exploit

Subscribe to the Barracuda Blog.

Sign up to receive threat spotlights, industry commentary, and more.

The Email Security Breach Report 2025

Key findings about the experience and impact of email security breaches on organizations worldwide